Echo understands Dradis finding fields, project data, and your team's standards so it can deliver the right suggestion at the right moment.

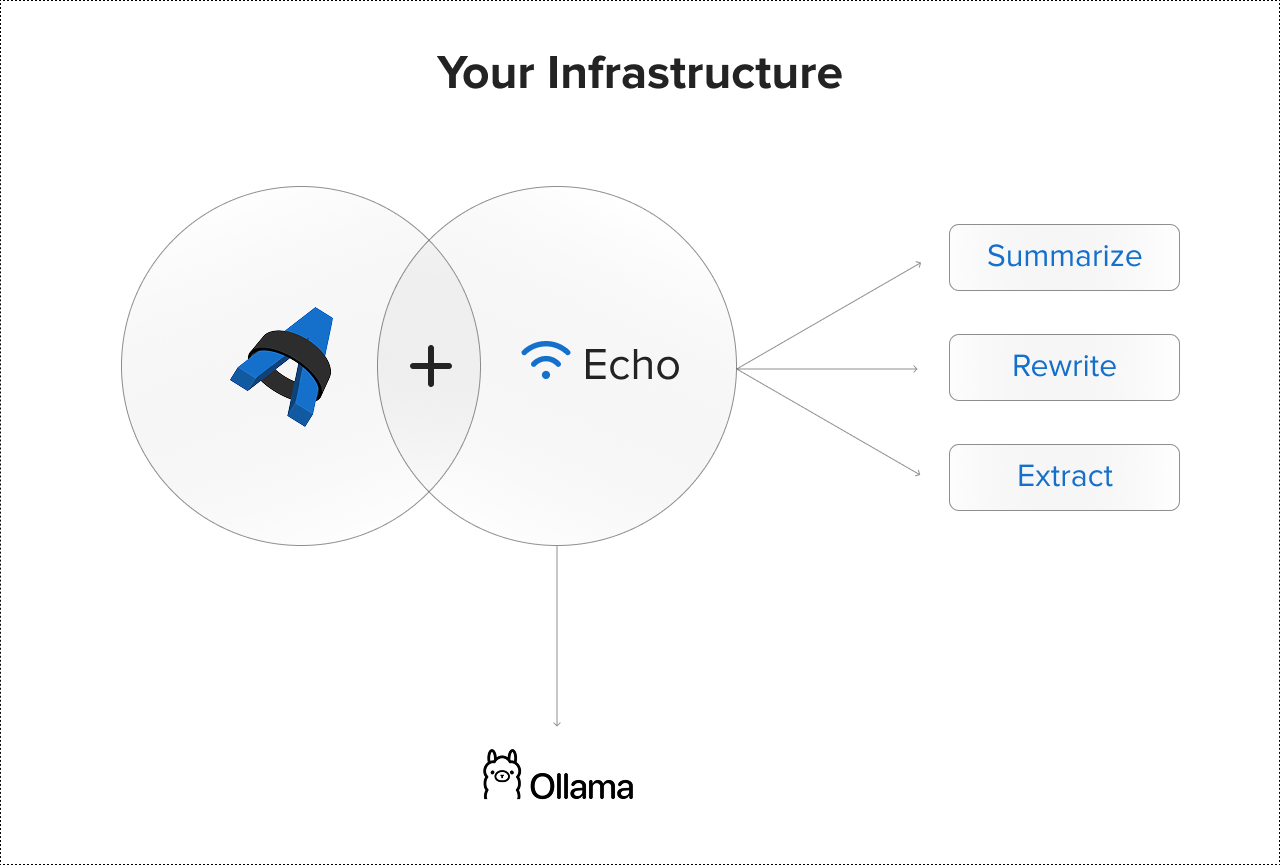

Unlike cloud-based solutions, Echo is designed with privacy, flexibility, and extensibility in mind, just like Dradis:

No external APIs. No cloud processing. No third-party data handling. No training of someone else's models with your data. Your sensitive findings never leave your network.

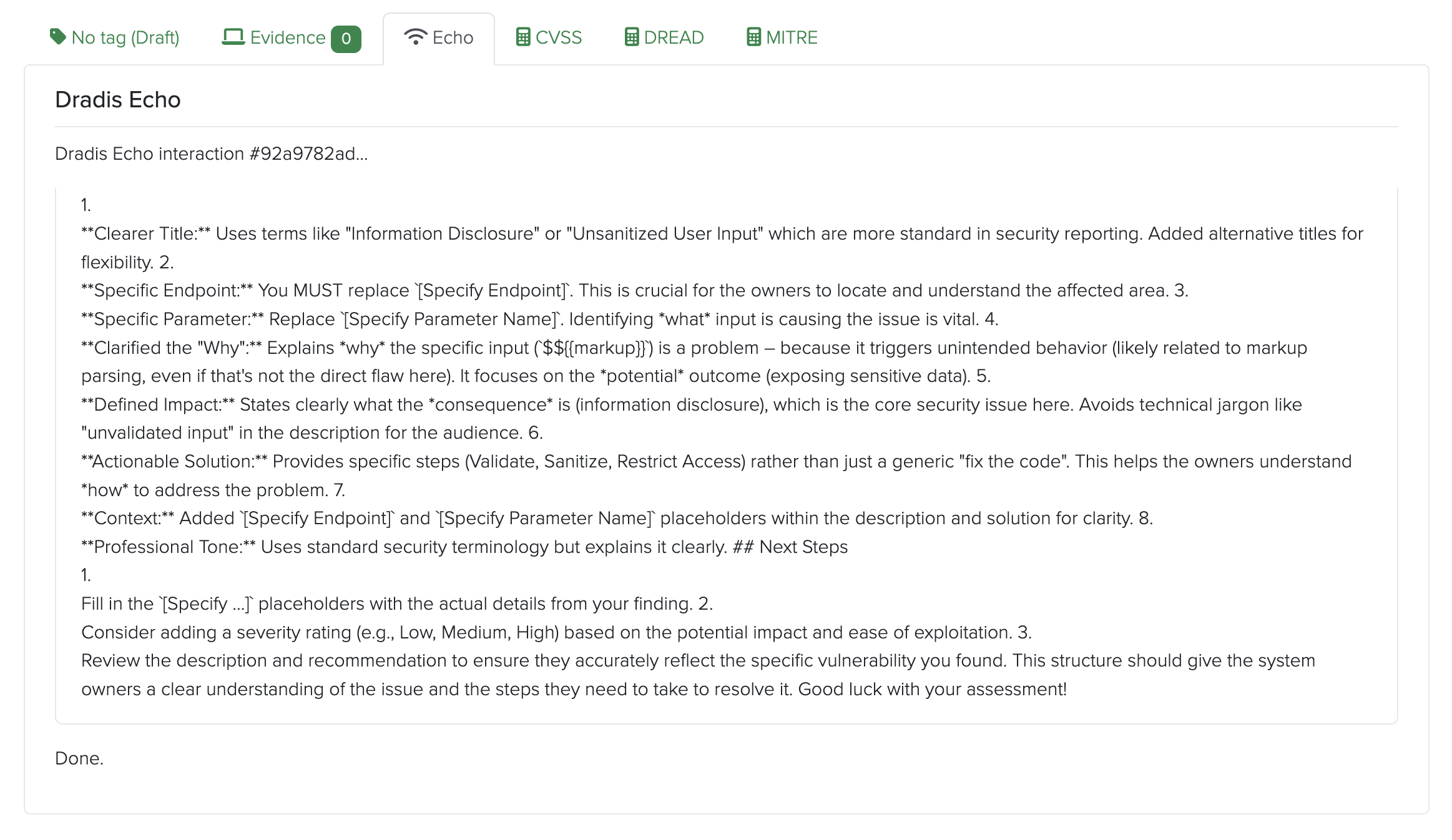

Echo generates contextual suggestions directly in Dradis: summarize raw scanner output, rewrite tester notes in executive language, or expand brief remediation advice into detailed steps.

Echo understands your finding's severity, affected systems, and context.

Review the suggestion, edit as needed, and save, all without leaving your workflow or writing custom code.

Echo adapts to your writing standards and client expectations. Create context-driven prompts to:

Echo speeds up reporting, but you stay in control:

Combine Echo with the built-in Dradis Quality Assurance workflow to deliver consistent results every time.

Turn raw scanner output or tester notes into polished executive summaries in seconds. Echo understands context and delivers relevant suggestions.

Expand brief remediation steps into detailed, client-ready guidance. Echo contextualizes your findings and fills in the details.

Generate technical versions for development teams and executive summaries for leadership, all from the same finding data.

Have Echo pull out CVSS scores, affected systems, or business impact from verbose notes, speeding up metadata entry.

Configure Echo prompts to use your approved severity levels, remediation language, and terminology, ensuring consistency across all reports.
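To illustrate what a context-driven prompt does, here is a hypothetical Python sketch; it is not Echo's prompt format, which you configure inside Dradis. It assumes finding text written in the `#[Field]#` markup Dradis uses for issue fields, and hypothetical field names `Title`, `Severity`, and `Remediation`. It pulls those values out of the finding and combines them with team rules such as approved severity labels and preferred terminology.

```python
from __future__ import annotations

import re
from string import Template

# Matches a Dradis-style field header such as "#[Title]#" on its own line.
FIELD_RE = re.compile(r"^#\[(?P<name>[^\]]+)\]#[ \t]*$", re.MULTILINE)


def parse_fields(text: str) -> dict[str, str]:
    """Split Dradis-style finding text into {field name: field value}."""
    fields: dict[str, str] = {}
    matches = list(FIELD_RE.finditer(text))
    for i, match in enumerate(matches):
        # A field's value runs until the next header (or the end of the text).
        start = match.end()
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        fields[match.group("name")] = text[start:end].strip()
    return fields


# Hypothetical team prompt that enforces approved severity labels and wording.
PROMPT = Template(
    "Rewrite the remediation for '$title' (severity: $severity) as "
    "client-ready steps. Use only these severity labels: $labels. "
    "Prefer the term '$preferred' over '$avoid'.\n\nRemediation:\n$remediation"
)


def build_prompt(finding_text: str, labels: list[str], preferred: str, avoid: str) -> str:
    """Fill the team prompt with values taken from the finding's fields."""
    fields = parse_fields(finding_text)
    return PROMPT.substitute(
        title=fields.get("Title", ""),
        severity=fields.get("Severity", "unrated"),
        labels=", ".join(labels),
        preferred=preferred,
        avoid=avoid,
        remediation=fields.get("Remediation", ""),
    )
```

Keeping the team rules in one shared template, rather than retyping them per finding, is what makes the output consistent across testers and reports.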

Choose your processing model via Ollama and switch anytime. Echo works with any compatible model; you own your context engine, data, and workflow.

Echo is built on understanding context: where you are in your workflow, what you're working on, and what matters most in that moment.

Unlike generic tools, Echo knows:

Context-driven assistance means Echo delivers the right suggestion at the right moment, not generic output that requires heavy editing.

Local processing via Ollama is typically fast for finding summaries and rewrites. Latency depends on your server hardware and the processing model you choose.

Echo works with any model available on Ollama, including Llama 2, Mistral, Neural Chat, and others. You choose the model that best fits your performance and quality needs. Switch models anytime without changing your Dradis setup.
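As a minimal sketch of what model choice looks like at the Ollama level (not Echo's internal code), the Python below targets Ollama's local REST endpoint `POST /api/generate`, assuming the server runs on its default address `http://localhost:11434`. The model is a single field in the request body, so switching models means changing one string. The helper names `build_generate_request` and `generate` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a non-streaming Ollama generate request.

    Hypothetical helper for illustration; not part of Dradis Echo.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request to the local Ollama server and return the generated text."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # With "stream": False, Ollama returns one JSON object whose
    # "response" field holds the full completion.
    return body["response"]
```

For example, `generate("mistral", prompt)` and `generate("llama2", prompt)` send the same prompt to two different models; both calls need a running Ollama server with those models already pulled (for instance with `ollama pull mistral`). Because the request never leaves `localhost`, the finding text stays on your own hardware.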

Echo is a productivity tool that speeds up writing and improves consistency, not a decision-maker. All suggestions require human review before saving: Echo drafts them, and you refine and approve them.

Yes. You define and manage custom prompts in Dradis Echo, tailored to your team's standards, audience, and use cases. Save and reuse prompts across your entire team. Echo adapts to your workflow and context.
