Note: this is a cross-post and can also be found on the Dradis blog.

Today I am pleased to announce Dradis Framework Professional Edition. Back in 2007, when I started the Dradis Framework project, I could not have anticipated the success that it would have. Four years, 3,000 commits, 19,000 downloads and 19 releases later, we are still making a difference for hundreds of security professionals (and aficionados) out there.

Dradis was announced at the first edition of MWRICON after many hours of late-night coding. Today we have three full committers, a small number of trusted partial committers and dozens of contributors. Dradis 2.0 was a big thing, and it was even bigger when Dradis was featured in Offensive Security’s Metasploit Unleashed. Then came Russ McRee’s coverage in the toolsmith column of ISSA’s magazine, our own chapter in Gray Hat Hacking, inclusion in BackTrack since BT4, the talks at DC4420 and DEFCON 17, and so many other articles and references.

It was encouraging that some people believed in the project from the beginning. I am grateful that my current employer (NGS Secure, which was still called NGSSoftware when I joined) and my previous one (MWR InfoSecurity) let me carry on working on Dradis as my side project and even gave me time to continue improving the tool.

We have come a long way. It was only a matter of time before organizations whose consultants were already using Dradis approached me for help in further tailoring Dradis to their needs. Sometimes this consisted of helping them with small tweaks they were making to the code; other times it meant developing full-blown custom plugins for them, to connect Dradis to their other systems or to produce reports in their particular format. That is why I started Security Roots Ltd in 2010.

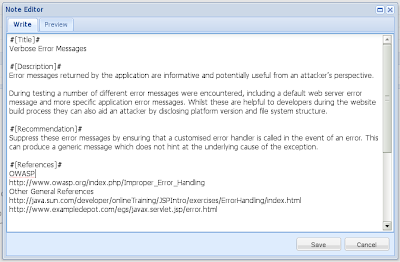

Dradis was started by a security consultant, with the security consultant’s needs and goals in mind (share information with the other teammates, portable, platform-independent, etc.). These are a subset of the needs and goals of the organization to which these consultants belong. The Technical Director of a security company understands the benefits of consultants using Dradis, but he needs more. He wants all his teams to work with Dradis in a standardized way. He wants everyone in the team to be able to use the latest version of Dradis without having to bother about upgrading and dependencies. He wants to be able to see how the different teams are doing, quickly check each team’s findings, maybe even extract some metrics or generate interim reports for clients with the critical issues already captured by the teams.

Enter Dradis Framework Professional Edition, a virtual appliance that leverages the advanced features of Dradis and extends it to enable multiple teams to work concurrently:

- It provides a centralized information repository:

    - Information is always available: during the project and afterwards.

    - Quickly inspect the project history or review the projects for a given user.

    - Ideal for teams that work across multiple time zones.

- Hassle-free deployment: power up the virtual appliance and you and your team can start working and sharing information.

- The virtual appliance is easy to update and back up.

- Bundled with Vuln::DB, import issues to your Dradis projects from the central issue database.

I am thrilled about the prospect of making consultants’ lives ever easier, helping organizations work more effectively and making sure their clients receive the best value for money. Let the consultants focus on what they are good at and what they enjoy most: breaking things, while we minimize the hassle associated with the back-end tasks required to coordinate their efforts.

This is a great opportunity to make a difference. Let’s make the most of it.

Daniel

Lead developer